Build smarter AI with RAG (Retrieval-Augmented Generation) as a Service. We connect your LLMs to private data to deliver accurate, secure, and context-aware responses. It is ideal for AI chatbots, internal knowledge systems, and enterprise search.

Our RAG-as-a-Service offerings are designed to introduce you to the capabilities of Retrieval-Augmented Generation. Our expertise covers everything from data ingestion to deployment. So, let’s look at each service in detail.

Our AI developers evaluate your content, data sources, use cases, and user requirements. They also advise on architecture, retrieval design, embedding strategies, vector databases, indexing, and generation workflows to match your performance, cost, and latency goals.

We develop pipelines that ingest, clean, and embed your documents, databases, APIs, or knowledge graphs. Wherever you store your content (PDFs, internal wikis, web pages, or structured records), we help you convert it into formats ready for RAG.
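To illustrate the cleaning and chunking stage, here is a minimal Python sketch. It assumes the text has already been extracted from the source (for example by a PDF or HTML parser) and splits it into fixed-size character windows with overlap. The `Chunk` type, the `chunk_document` helper, and the 500/100 sizes are hypothetical choices for illustration; the embedding step that follows would call whichever embedding model the project uses.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the text came from, e.g. a file path or URL
    index: int   # position of this chunk within its source
    text: str


def clean_text(raw: str) -> str:
    # Collapse runs of whitespace (line breaks, tabs, repeated spaces)
    # that PDF or HTML extraction typically leaves behind.
    return re.sub(r"\s+", " ", raw).strip()


def chunk_document(source: str, raw: str, chunk_size: int = 500, overlap: int = 100) -> list[Chunk]:
    # Hypothetical helper: split cleaned text into overlapping character windows.
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    text = clean_text(raw)
    step = chunk_size - overlap
    chunks: list[Chunk] = []
    for start in range(0, len(text), step):
        piece = text[start:start + chunk_size].strip()
        if piece:
            chunks.append(Chunk(source, len(chunks), piece))
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks
```

Overlap means a sentence that straddles a boundary appears in two chunks, which tends to help retrieval at the cost of some extra storage. Production pipelines often split on sentence or heading boundaries instead of raw character counts.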

If you are worried about scalability or query load, we have your back. As a RAG services provider, we help you integrate scalable vector databases such as Pinecone, Milvus, Chroma, and Weaviate. We also configure efficient retrieval strategies, including approximate nearest neighbor (ANN) search, hybrid search, metadata filtering, and similarity thresholds.
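The retrieval controls named above can be pictured with a small, self-contained Python sketch. It performs an exact cosine-similarity scan over an in-memory list; vector databases such as Pinecone, Milvus, Chroma, and Weaviate instead use ANN indexes to approximate this scan at scale, and each has its own client API, which this sketch does not model. The `retrieve` function, the dictionary layout, and the 0.75 threshold are illustrative assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of the vector norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, index, k=3, min_score=0.75, where=None):
    """Exact (brute-force) search over `index`, a list of dicts with
    'id', 'vector', and 'metadata' keys. Returns up to k (id, score) pairs."""
    hits = []
    for item in index:
        # Metadata filter: skip items whose metadata doesn't match every key in `where`.
        if where and any(item["metadata"].get(key) != value for key, value in where.items()):
            continue
        score = cosine(query_vec, item["vector"])
        # Similarity threshold: drop weak matches instead of passing them to the LLM.
        if score >= min_score:
            hits.append((item["id"], score))
    hits.sort(key=lambda hit: hit[1], reverse=True)
    return hits[:k]
```

Raising `min_score` trades recall for precision: fewer, stronger passages reach the model, and a query with no passage above the threshold can fall back to an "I don't know" answer.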

Count on our RAG developers to connect your retrieval results swiftly to Large Language Models from providers such as OpenAI and Anthropic, or to open models such as LLaMA. We also design prompt templates, chaining logic, reranking, and post-processing to produce high-quality, relevant responses.
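As a minimal illustration of prompt templating, the Python sketch below numbers the retrieved passages, keeps them within a size budget, and instructs the model to answer only from that context. The template wording, the `build_prompt` name, and the character budget are assumptions. The resulting string would then be sent through the client SDK of whichever model provider is chosen, which is not shown here.

```python
from string import Template

# Illustrative grounding template; wording should be tuned per model and use case.
PROMPT = Template(
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you don't know. "
    "Cite sources as [n].\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def build_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 2000) -> str:
    """Assemble a grounded prompt from (source, text) passages ranked best-first."""
    blocks: list[str] = []
    used = 0
    for n, (source, text) in enumerate(passages, start=1):
        block = f"[{n}] ({source}) {text}"
        # Rough context budget measured in characters (a stand-in for token counting).
        if used + len(block) > max_chars:
            break
        blocks.append(block)
        used += len(block)
    # substitute() inserts values literally, so a '$' inside user text is safe.
    return PROMPT.substitute(context="\n".join(blocks), question=question)
```

Numbering the passages lets a post-processing step check that every citation in the answer points at a passage that was actually supplied.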

Where needed, we fine-tune base models (or train lightweight adapters) for your domain, injecting domain knowledge, style, tone, brand voice, or compliance constraints.

We establish continuous feedback loops in which user corrections, logs, and performance metrics feed into retraining or prompt updates. Over time, your RAG system becomes more intelligent, precise, and robust.

Count on us to deploy your RAG application seamlessly, integrate it with your systems, track performance, manage latency and scaling, and keep your knowledge base up to date.

If you are worried about security, access control, or governance, rest assured. We help design role-based access, filtering, audit trails, content validation, and compliance layers so that only permitted users can access your data.
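One common way to enforce role-based access in a RAG pipeline is to filter retrieved documents against the user's roles before any text reaches the LLM prompt, logging each decision for audit. The Python sketch below shows that idea. The `Doc` type, the role names, and the in-memory audit list are hypothetical; a real deployment would typically push such filters into the vector database query and write audit events to durable storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # roles permitted to see this document


def filter_by_role(user: str, roles: set[str], docs: list[Doc], audit_log: list[dict]) -> list[Doc]:
    """Keep only documents the user's roles permit, recording each decision."""
    permitted = []
    for doc in docs:
        allowed = bool(roles & doc.allowed_roles)  # any shared role grants access
        audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "doc_id": doc.doc_id,
            "allowed": allowed,
        })
        if allowed:
            permitted.append(doc)
    return permitted
```

Filtering before prompt assembly matters because anything placed in the prompt can surface in the model's answer, regardless of later output checks.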

Connect with our RAG (Retrieval-Augmented Generation) experts to explore how your business can turn private data into intelligent, accurate, and efficient AI experiences.

Let’s design a solution that enhances your AI workflows with real, reliable knowledge.

OpenAI Embeddings

Sentence Transformers

Cohere Embeddings

InstructorXL

LangChain

LlamaIndex

Haystack

Semantic Kernel

AWS

Azure Functions

Docker

Weights & Biases

Apache Airflow

LangChain Document Loaders

Unstructured.io

Tesseract

LangSmith

Helicone

WhyLabs

Prometheus

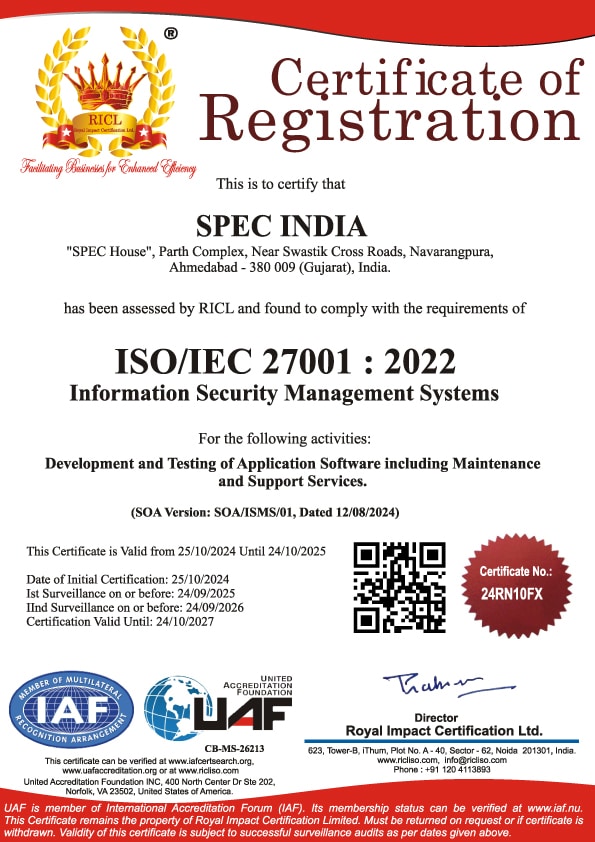

Choosing the right RAG partner makes the difference between a prototype and a production-ready solution. At SPEC INDIA, we combine technical excellence, industry experience, and a customer-first mindset to deliver reliable, accurate RAG systems.

As a RAG services provider, we handle everything from strategy and architecture design to deployment and monitoring. Our team is well-versed in LLMs, embeddings, vector databases, and orchestration frameworks, and delivers production-ready solutions tailored to your business requirements.

SPEC INDIA has long been its clients’ go-to software development company and cutting-edge technology integrator. With extensive experience developing enterprise-level software solutions, we are also a proud partner of Fortune 500 companies. Our RAG implementations are backed by success stories in which clients have achieved accuracy, efficiency, and a better user experience at scale.

When it comes to transparency, we, a leading RAG-as-a-Service provider, maintain fairness and clarity. We optimize RAG solutions for cost-effectiveness and performance, and our flexible cost models help you manage compute, vector storage, and API usage without hidden surprises.

Protecting and securing your data is our top priority. Our developers build every RAG system with secure ingestion pipelines, role-based access control, and compliance-ready deployments.

RAG systems are meant to improve over time through refinement and feedback. Our partnership extends beyond deployment to include monitoring, updating, and enhancing your system so it keeps pace with fresh data, evolving user requirements, and AI advancements.

Whichever engagement model you choose (dedicated team, project-based, or long-term partnership), our developers adapt to your business methodologies. Our engagement models give you the freedom to scale resources, timelines, and investment based on your goals.

Are you ready to transform how your users interact with your knowledge? Let’s design a Retrieval-Augmented Generation solution that delivers accuracy, agility, and trust. Connect with us today to explore a roadmap tailored to your business.

Get My RAG Consultation

Your business needs far more than a simple question-answering machine. Our RAG-backed smart chatbots and virtual assistants draw only on your trusted data sources to give your customers accurate, context-supported answers. Partner with us to build and deploy intelligent assistants that understand your business, reduce hallucinations, and hold natural, personalized conversations with each user.

Your users are likely frustrated by the search results that traditional knowledge bases return. RAG transforms static repositories into conversational, dynamic systems that deliver accurate summaries and responses. Our team combines your wikis, manuals, and documents into a RAG-backed solution that boosts staff productivity and knowledge accessibility.

RAG also resolves customer queries promptly by retrieving relevant information and generating accurate responses. Connect with us to build user-centric assistants that cut resolution time, build trust, improve satisfaction, and reduce the workload on your human support team.

Our RAG solutions allow your customers to ask questions naturally and get context-based answers about everything from R&D reports to legal contracts. Our software developers can also build retrieval pipelines that process and index your unstructured documents, giving your users fast, dependable Q&A experiences.

RAG improves search by producing customized summaries and comparisons in addition to retrieving pertinent documents. We assist you in putting domain-adapted generation and hybrid retrieval strategies into practice so that your users can quickly and thoroughly explore large, complicated datasets.
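Hybrid retrieval typically runs a keyword search (such as BM25) and a vector search side by side and then merges their results. Reciprocal rank fusion (RRF) is one widely used merging method, and it needs only each list's ranks, not comparable scores. The Python sketch below is a minimal illustration under that assumption; the document IDs are made up, and other fusion schemes (for example, weighted score blending) are equally valid choices.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    so items ranked well by several retrievers rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_hits = ["d1", "d2", "d3"]  # e.g. from a BM25 keyword index
vector_hits = ["d3", "d1", "d4"]   # e.g. from an embedding similarity search
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # ['d1', 'd3', 'd2', 'd4']
```

The constant `k` dampens the advantage of top ranks; 60 is a commonly used default, but it is a tunable parameter rather than a fixed rule.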

Analysts and executives require more than just raw data; they require insights. RAG empowers decision-making by grounding generated recommendations in real business data. Together, we create domain-aware, safe systems that synthesize reports, identify patterns, and deliver reliable, decision-ready outputs.

Discover the diverse range of industries we proudly support, delivering innovative software solutions to companies across business verticals. Our expertise spans multiple sectors, ensuring a tailored service for every unique need.

You can leverage RAG for smarter chatbots, faster knowledge discovery, reliable customer support, and decision-ready analytics. By grounding AI outputs in your real data, it reduces hallucinations, builds trust, and improves efficiency across internal and customer-facing apps.

No. RAG works with both small and very large datasets. You can start by integrating a subset of your documents, such as FAQs, manuals, or policies, and scale up as your requirements grow. Our in-house team designs pipelines that handle everything carefully, from individual PDFs to enterprise-level datasets.

Data privacy and governance remain our top priority. Our RAG solutions include secure ingestion pipelines, role-based access controls, compliance-ready deployments, and monitoring to ensure your sensitive information is protected and accessible only to permitted users.

You can expect a proof of concept (POC) in 3–4 weeks, depending on the complexity of your use case and data sources. Once the POC is ready, we take it to a production-ready solution with ongoing monitoring, fine-tuning, and support to ensure long-term success.

While no system can guarantee zero hallucinations, RAG significantly reduces them by grounding answers in your trusted knowledge sources. We also add validation, filtering, and feedback loops that make your system smarter and more reliable over time.

SPEC House, Parth Complex, Near Swastik Cross Roads, Navarangpura, Ahmedabad 380009, INDIA.
