Pentaho Data Integration (PDI): A Guide to Enterprise-Grade ETL

Updated November 24th, 2025

Raw data holds information that shapes a business's future and competitiveness, and enterprises are under pressure to turn it into actionable insights. According to IDC, the global volume of data is expected to reach 175 zettabytes by 2025, up from 64 zettabytes in 2020. This growth has made data integration one of the most critical concerns in modern IT environments.

Yet many organizations still struggle with data silos, inconsistent formats, and performance bottlenecks that hinder decision-making. That is one of the core reasons the global ETL market is forecast to grow to USD 29 billion by 2029.

Enterprises seeking reliability and flexibility opt for Pentaho Data Integration. It features a drag-and-drop interface, support for complex transformations, and seamless integration across databases, big data platforms, and cloud services. If you are looking to build robust and maintainable data pipelines, PDI enables you to do so.

This guide is specifically designed for you if you want to know:

- What PDI is and why to use it

- Key features and architecture that set PDI apart from other ETL tools

- Real-world use cases and case studies

- How your business can implement PDI for scalability, compliance, and ROI

If you are a CIO, CTO, data architect, or BI leader, this resource will help you evaluate whether PDI aligns with your organization's data strategy, governance needs, and digital transformation goals.

What is Pentaho Data Integration?

Pentaho Data Integration (PDI) is the data integration and ETL component of the broader Pentaho business analytics platform. It was initially developed as an open-source platform and later came under Hitachi Vantara. It suits organizations that need analytics and reporting, because it helps with consolidating, cleansing, and preparing large volumes of data.

It lets data engineers, business intelligence teams, and less technical users build workflows visually, with little or no hand-written code. Enterprises that want both ease of use and enterprise-grade scalability stand to benefit most from PDI.

Key Capabilities of PDI

- Comprehensive Connectivity

It connects to relational databases, flat files, SaaS platforms, APIs, big data clusters, and cloud storage.

- Flexible Processing Modes

It supports both batch ETL (scheduled data movement) and real-time data integration.

- Data Transformation Engines

It helps in cleansing, enriching, and harmonizing raw data into business-ready formats.

- Big Data Integration

It provides connectors for Hadoop, Spark, and other distributed platforms for handling petabyte-scale datasets.

- Metadata Injection & Reusability

It reduces duplicated workflow development by enabling parameterized workflows and reusable templates.

- Enterprise Ready Deployment

It enables horizontal scaling through clustering and is integrated with Pentaho’s business analytics suite for reporting and dashboards.
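The idea behind metadata injection and reusable templates can be sketched in plain Python. This is not PDI's API: `make_transformation` and the `rename` and `required` metadata keys are hypothetical names chosen for illustration. The sketch only shows how one template can serve several sources when the field mapping is supplied at run time rather than hard-coded.

```python
def make_transformation(metadata):
    """Build a transformation from metadata instead of hard-coding it.

    `metadata` is a hypothetical structure with two keys:
      rename   -- maps source field names to target field names
      required -- target fields that must be present and non-empty
    """
    rename = metadata["rename"]
    required = metadata["required"]

    def transform(rows):
        output = []
        for row in rows:
            # Apply the injected field mapping; unmapped fields pass through.
            mapped = {rename.get(key, key): value for key, value in row.items()}
            # Keep only rows where every required field has a value.
            if all(mapped.get(field) not in (None, "") for field in required):
                output.append(mapped)
        return output

    return transform


# The same template, configured for two differently shaped sources.
crm_load = make_transformation(
    {"rename": {"cust_id": "customer_id"}, "required": ["customer_id"]}
)
erp_load = make_transformation(
    {"rename": {"CUSTNO": "customer_id"}, "required": ["customer_id"]}
)
```

Adding a third source then means supplying a new metadata dictionary rather than building a new workflow.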

PDI stands out from many other tools because it covers end-to-end data pipeline orchestration. Because it integrates with data warehouses, data lakes, and BI platforms, it helps businesses establish a single version of truth for decision-making.

That covers the basics. Next, we look at why enterprises prefer PDI.

Why Enterprises Need an ETL Solution like Pentaho Data Integration (PDI)

Enterprises generate data from many sources, including customer interactions, financial transactions, IoT device feeds, and cloud applications. Each source has its own format, velocity, and quality. Without a robust integration layer, this data can lead to inconsistency, compliance risks, missed opportunities, and data silos.

Common Challenges Enterprises Face While Managing Data

Every business has different departments that store data in isolated systems. Building a unified view of operations or customers becomes difficult under such circumstances.

Beyond isolated systems, varying data formats are also a challenge, as they can cause errors or duplicates during analysis.

As business operations scale, manual or semi-automated processes often break down due to the volume of records.

Decision-making increasingly demands data in real time, sometimes within milliseconds, but traditional batch processes often delay it.

Industries like healthcare, finance, legal, and telecom require secure, auditable, and policy-compliant data integration.

The Business Case for Enterprise-Grade ETL

PDI enables data validation, cleansing, and transformation, which helps executives to make insight-based decisions.

Responding quickly to evolving market conditions is essential for any business, and PDI enables enterprises to process real-time streams and batch data automatically.

PDI is built to scale, so performance can be maintained as data volumes grow with demand.

Another significant benefit of PDI is that it reduces the time and resources pipelines require, which lowers the total cost of ownership compared to custom-coded pipelines or legacy ETL systems.

Built-in audit trails, role-based access, and integration with enterprise security frameworks enable organizations to meet compliance standards, including GDPR, HIPAA, and SOX.


PDI Architecture

A strong data integration platform needs a scalable and flexible architecture, one that can process large data volumes while fitting into the existing technology stack. PDI features a modular architecture that efficiently handles enterprise workloads.

Core Building Blocks: Transformations and Jobs

There are two key components of PDI:

Transformations

- Define the steps for extracting, cleaning, transforming, and loading data.

- Each transformation is a pipeline of steps connected by data flows (for example, input from a database, apply transformation rules, output to a data warehouse).

- Designed for parameterization and reusability, so the same transformation can run across multiple datasets.

Jobs

- Provide the control layer for enterprise automation.

- Used for workflow management.

- Orchestrate multiple transformations, sub-jobs, and external processes.

Transformations manage the "how" of data processing, and jobs manage the "when and in what order."
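The division of labor between transformations and jobs can be sketched in plain Python. This is a conceptual illustration, not PDI's API: the step functions, `run_transformation`, and `run_job` are hypothetical names. A transformation chains steps over a stream of rows, while a job runs named entries in order and stops at the first failure.

```python
def drop_missing_email(rows):
    """Step: filter out rows that have no email value."""
    return (row for row in rows if row.get("email"))


def normalize_email(rows):
    """Step: trim and lowercase the email field."""
    return ({**row, "email": row["email"].strip().lower()} for row in rows)


def run_transformation(rows, steps):
    """The 'how': feed each step's output into the next step."""
    for step in steps:
        rows = step(rows)
    return list(rows)


def run_job(entries):
    """The 'when and in what order': run entries in sequence.

    `entries` is a list of (name, callable) pairs. Returns the names that
    completed and the name of the entry that failed (or None).
    """
    completed = []
    for name, entry in entries:
        try:
            entry()
        except Exception:
            return completed, name
        completed.append(name)
    return completed, None


source = [{"email": " Ana@Example.com "}, {"email": None}]
warehouse = []

completed, failed = run_job([
    ("load_customers", lambda: warehouse.extend(
        run_transformation(source, [drop_missing_email, normalize_email]))),
    ("report_row_count", lambda: print(f"loaded {len(warehouse)} row(s)")),
])
```

Keeping the two concerns separate means a transformation can be reused by several jobs, and a job can change its ordering or failure handling without touching the row-level logic.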

Deployment Flexibility

Depending on its IT strategy, a business can deploy PDI in different environments:

- On-Premises: Installed within the corporate data center for organizations with confidential and regulated data.

- Cloud-Native: Deployed on public or private cloud platforms (AWS, Azure, GCP) with support for containerization (e.g., Docker, Kubernetes).

- Hybrid: Combines on-premise and cloud pipelines, enabling gradual modernization without disrupting existing systems.

Scalability & Performance

- Clustering: By executing transformations across several clustered nodes, PDI supports distributed execution and faster processing of big datasets.

- Load Balancing: For optimal performance and resilience, workloads can be distributed among servers.

- Parallel Processing: For concurrent execution, data streams can be divided into several threads.
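The parallel processing pattern in the last bullet can be sketched as follows. This is a generic Python illustration, not how PDI is implemented: `partition`, `clean_partition`, and `run_parallel` are hypothetical names. It splits a row set into partitions, processes each partition on its own thread, and merges the results.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, copies):
    """Distribute rows round-robin into `copies` partitions."""
    return [rows[i::copies] for i in range(copies)]


def clean_partition(rows):
    """Work done by each thread: tidy the name field of every row."""
    return [{**row, "name": row["name"].strip().title()} for row in rows]


def run_parallel(rows, copies=4):
    """Process partitions concurrently, then merge the results.

    Output is grouped by partition, so the original row order is not preserved.
    """
    parts = partition(rows, copies)
    with ThreadPoolExecutor(max_workers=copies) as pool:
        results = list(pool.map(clean_partition, parts))
    return [row for part in results for row in part]
```

In CPython, threads mainly help when the per-row work waits on I/O such as database or API calls; for CPU-heavy logic a process pool would be the closer analogue. The point here is the partition, process, and merge shape rather than raw speed.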

Integration with Pentaho and Third-Party Tools

- Pentaho BA Suite: Pentaho Business Analytics is natively integrated for self-service BI, dashboards, and reporting.

- External BI Platforms: Can deliver data to Qlik, Power BI, or Tableau for visualization.

- Enterprise Scheduling & Orchestration: Works with advanced job orchestration tools like enterprise schedulers, Oozie, and Apache Airflow.

Monitoring & Management

- Centralized monitoring, logging, and error handling are made possible by Pentaho Enterprise Console, which is accessible through the enterprise edition.

- Allows for proactive failure or bottleneck resolution and gives insight into pipeline performance.

PDI vs Other Enterprise ETL Tools

| Feature/Criteria | Pentaho Data Integration (PDI) | Informatica PowerCenter | Talend Data Integration | Apache NiFi |
| --- | --- | --- | --- | --- |
| Licensing & Cost | Open-source (community edition) + affordable enterprise edition | High license and maintenance costs | Open-source + subscription-based enterprise edition | Fully open-source |
| Ease of Use | Drag-and-drop GUI, minimal coding required | Complex interface, requires trained developers | GUI-based but steeper learning curve than PDI | Flow-based interface, simpler but less ETL-focused |
| Connectivity | Wide connectors: RDBMS, NoSQL, APIs, cloud storage, SaaS apps | Extensive connectivity, strong enterprise legacy system support | Extensive connectors, especially for cloud-native systems | Strong support for streaming and IoT data |
| Big Data & Cloud Support | Built-in support for Hadoop, Spark, AWS, Azure, GCP | Available, but with add-ons and higher licensing costs | Strong integration with big data and cloud platforms | Native streaming and real-time ingestion, with less batch ETL focus |
| Scalability | Clustered execution, load balancing, cloud-native deployments | Enterprise-grade scalability, but expensive | Highly scalable with an enterprise subscription | Scales well for event streaming, less suited for heavy transformation logic |
| Data Transformation | Rich transformation steps, metadata injection, reusable templates | Very powerful but requires deep expertise | Flexible with strong customization | Lightweight transformations are not as robust for complex ETL |
| Compliance & Security | Role-based access, audit logs, and enterprise security integration | Strong enterprise security and governance | Strong compliance and data governance support | Basic security features require external add-ons |
| Deployment Options | On-premise, hybrid, and cloud-native (Docker/Kubernetes support) | Primarily on-premise, with cloud available at a higher cost | On-premise, cloud, and hybrid | Cloud-native and on-premise |
| Best Fit For | Enterprises seeking cost-effective, scalable ETL with ease of use | Large enterprises with a budget for premium vendor support | Organizations with cloud-first strategies and open-source culture | Companies prioritizing real-time streaming and IoT data |


Implementing Pentaho Data Integration in an Enterprise Environment

Adopting Pentaho Data Integration is more than installing software; it requires a planned, full-scale deployment. It involves aligning business goals, technical requirements, and governance practices to ensure seamless integration. We have outlined the best practices to guide businesses through effective implementation.

Step-by-step Adoption Roadmap

Gather Data Integration Requirements

- Evaluate your business goals: Customer analytics, cloud migration, regulatory reporting, etc.

- Map data sources, target systems, and formats.

- Define performance requirements (batch vs real-time, data volumes, and latency).

Install & Configure PDI

- Select between the enterprise edition and community edition.

- Configure repositories for storing transformations and jobs.

- Establish security roles and access permissions in accordance with enterprise policies.

Build Pilot Workflows

- Start with a small yet impactful use case.

- Validate transformation logic and output consistency.

- Set performance benchmarks to evaluate tuning needs.

Scale Across Departments & Systems

- Enable automation by integrating with enterprise scheduling and orchestration tools.

- Standardize templates and transformation logic.

- Gradually extend your workflows to accommodate more data sources and teams.

Operationalize with Tracking & Governance

- Make sure to document workflows for audit purposes and compliance.

- Establish SLAs for accuracy, delivery, and data freshness.

- Use centralized monitoring.

Advanced Capabilities of PDI

Pentaho Data Integration (PDI) is an established ETL tool with capabilities that extend beyond simply moving and transforming data. PDI helps enterprises pursue digital transformation, meet regulatory requirements, and support AI-driven initiatives.

Data Migration & Modernization Projects

- Simplifies migrating legacy data warehouses to modern platforms such as BigQuery, Snowflake, and Azure Synapse.

- Enables schema evolution, data cleansing, and bulk movement while minimizing downtime.

- Enables seamless transitions during system upgrades, mergers, or cloud adoption strategies.

Data Warehouse Automation

- Automates repetitive tasks like dimension loading and incremental updates.

- Offers reusable templates to speed up data mart and warehouse builds.

- Reduces human error and improves consistency in large-scale data warehouse operations.
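An incremental update, one of the repetitive tasks listed above, can be sketched as a watermark-based load. This is a generic Python illustration rather than PDI functionality: `incremental_load`, the `id` and `updated_at` field names, and the in-memory `target` dictionary are assumptions made for the example.

```python
from datetime import datetime


def incremental_load(source_rows, target, last_loaded_at):
    """Upsert only rows changed since the previous run.

    `target` is a dict keyed by row id standing in for a warehouse table.
    Returns the new watermark to store for the next run.
    """
    new_watermark = last_loaded_at
    for row in source_rows:
        if row["updated_at"] > last_loaded_at:
            target[row["id"]] = row  # insert or overwrite
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark


target = {1: {"id": 1, "updated_at": datetime(2025, 1, 1), "city": "Pune"}}
source = [
    {"id": 1, "updated_at": datetime(2025, 1, 3), "city": "Mumbai"},
    {"id": 2, "updated_at": datetime(2024, 12, 31), "city": "Delhi"},
]
watermark = incremental_load(source, target, datetime(2025, 1, 1))
```

Only the row changed after the stored watermark is written, so each run touches the delta instead of reloading the whole table.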

Master Data Management Support

- Enhances enterprise-grade data governance and data quality initiatives.

- Identifies duplicates, implements data validation rules, and standardizes records across multiple systems.

- Assists businesses in creating a single, trusted view of enterprise master data.
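The standardize, validate, and deduplicate flow described above can be sketched in a few lines of Python. The function names, the choice of email as the matching key, and the simple validity rule are all assumptions for illustration; real master data matching usually relies on richer rules.

```python
def standardize(record):
    """Normalize whitespace and casing so equivalent records compare equal."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
    }


def is_valid(record):
    """Deliberately simple rule: non-empty local part and a dotted domain."""
    local, _, domain = record["email"].partition("@")
    return bool(local) and "." in domain


def build_master(records):
    """Return (master_records, rejected_raw_records)."""
    master = {}
    rejected = []
    for raw in records:
        record = standardize(raw)
        if not is_valid(record):
            rejected.append(raw)
            continue
        # The first occurrence of each email wins; later duplicates are dropped.
        master.setdefault(record["email"], record)
    return list(master.values()), rejected


records = [
    {"name": "ana  lopez", "email": "Ana@Example.com "},
    {"name": "Ana Lopez", "email": "ana@example.com"},
    {"name": "Bo", "email": "not-an-email"},
]
master, rejected = build_master(records)
```

Standardizing before matching is what lets the first two records collapse into one; without it they would be treated as different customers.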

Data Preparation for AI/ML Workloads

- Integrates with data science platforms to supply ready-to-use datasets.

- Cleanses data for AI/ML models to ensure consistent quality for training and prediction.

- Allows feature engineering pipelines that transform raw enterprise data into model-ready inputs.

Cloud-Native Integration

- Supports hybrid deployments so that businesses can integrate on-premises and cloud-based data.

- Provides connectors for AWS, Microsoft Azure, and Google Cloud.

- Offers flexibility for enterprises implementing a cloud-first or multi-cloud strategy.

Real-Time & Event-Driven Processing

- Allows streaming data ingestion from IoT devices, messaging queues, and APIs.

- Supports event-driven architectures, which are crucial for real-time fraud detection and operational intelligence.
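As an illustration of event-driven processing for fraud detection, the sketch below flags an account as soon as it produces too many events within a short window. It is a generic Python example, not PDI's streaming API: `detect_bursts`, the `account` and `ts` fields, and the thresholds are hypothetical, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict, deque


def detect_bursts(events, max_events=3, window_seconds=60):
    """Yield an alert when an account exceeds `max_events` within the window.

    Events are handled one at a time as they arrive, so alerts are produced
    without waiting for a batch to complete.
    """
    recent = defaultdict(deque)  # account -> timestamps inside the window
    for event in events:
        times = recent[event["account"]]
        times.append(event["ts"])
        # Drop timestamps that have fallen out of the sliding window.
        while times and event["ts"] - times[0] > window_seconds:
            times.popleft()
        if len(times) > max_events:
            yield {"account": event["account"], "ts": event["ts"], "count": len(times)}


events = [{"account": "A", "ts": t} for t in (0, 10, 20, 30)]
events += [{"account": "B", "ts": 35}, {"account": "A", "ts": 200}]
alerts = list(detect_bursts(events))
```

Because `detect_bursts` is a generator, it can consume an unbounded stream, such as a message queue reader, and emit alerts continuously.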

Orchestration with Enterprise Scheduling Tools

- Provides error handling, retry mechanisms, and conditional logic for robust automation.

- Enables integration with Apache Airflow, Oozie, and enterprise schedulers to ensure seamless large-scale workflows.
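Retry and conditional failure handling, as mentioned in the list above, follow a pattern that can be sketched independently of any scheduler. The helper below is a minimal Python illustration; `run_with_retry` and its parameters are hypothetical names and do not correspond to a PDI, Airflow, or Oozie API.

```python
import time


def run_with_retry(task, attempts=3, delay_seconds=0.0, on_failure=None):
    """Run `task`, retrying on any exception up to `attempts` times in total.

    If every attempt fails, call `on_failure(last_error)` when provided
    (the conditional branch, for example sending an alert); otherwise
    re-raise the last error.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt < attempts:
                time.sleep(delay_seconds)  # wait before the next attempt
    if on_failure is not None:
        return on_failure(last_error)
    raise last_error


calls = []


def flaky_load():
    """Simulated load that fails twice, then succeeds."""
    calls.append(1)
    if len(calls) < 3:
        raise RuntimeError("temporary connection error")
    return "loaded"


result = run_with_retry(flaky_load, attempts=3)
```

In practice the delay is often increased between attempts, and `on_failure` routes to an alerting or cleanup step rather than silently swallowing the error.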

Extensibility & Customization

- Developers can extend PDI with JavaScript, Java, or plugins, allowing custom transformations or connectors.

- Enterprises can tailor PDI to their unique business rules and industry-specific requirements.

Cost, Licensing, and ROI of Pentaho Data Integration

Any business considering ETL platforms should evaluate cost, licensing flexibility, and measurable return on investment (ROI). Compared to other enterprise ETL solutions, PDI is budget-friendly, without steep licensing fees.

Licensing Models: Community vs Enterprise Edition

The Community Edition is free and open-source, making it suitable for small-scale deployments, proofs of concept, or departmental use. It provides core ETL functionality, transformation, and connectivity. Its main shortfall is that it lacks advanced monitoring, clustering, and enterprise-level support.

The Enterprise Edition is offered as a paid subscription, which includes features like:

- Enterprise console for monitoring and management.

- Clustering, load balancing, and scalability enhancements.

- Security and governance capabilities for compliance-heavy industries

Enterprise Edition pricing depends on company size, deployment type, and support packages.

PDI is designed to keep costs down while delivering as much value as possible. Here is how it helps:

You can start with the Community Edition to try and test PDI, and then switch to the Enterprise Edition once you have seen the benefits.

- Reduced Development Effort

Drag-and-drop workflow design speeds up delivery and reduces the dependency on hand-coded ETL skills.

- Infrastructure Flexibility

With PDI, you do not need to depend on a single vendor since it runs on commodity hardware or cloud environments.

Since PDI offers reusable workflows, templates, and metadata injection, it reduces repetitive development work.

ROI of Pentaho Data Integration

Every investment raises the same question: how quickly will it pay back? To answer it, you need to know how ROI is realized:

Accelerating Time-to-Insight

Automation cuts the time needed to prepare and deliver reports and dashboards from weeks to hours. Your business spends more time on decision-making and less on manual data entry.

Improved Data Quality & Compliance

With clean, organized, validated, and auditable data, your business reduces the risk of penalties and builds trust.

Scalability without Exponential Cost

PDI scales horizontally without a matching increase in licensing costs, making it more affordable than traditional per-connector or per-core pricing.

Productivity Gains

Business Intelligence teams spend more time analyzing results than coding pipelines.

Support for Growth & Transformation

PDI supports modernization projects by integrating with cloud, AI/ML platforms, and big data, helping digital initiatives deliver more value.

Here is the strategic perspective for Executives:

- For CIOs and CTOs: PDI provides an open, adaptable solution that combines cloud, hybrid, and legacy environments, thereby lowering technical debt.

- For CFOs: It offers a definite return on investment in terms of compliance, productivity, and operational efficiency, and it reduces long-term costs in comparison to traditional ETL vendors.

- For CEOs and company owners: PDI gives the company the ability to use data as a strategic asset, giving it a competitive edge in markets that rely heavily on data.

Future of Enterprise ETL with PDI

ETL operations are shifting towards real-time, cloud-native, and AI-ready architectures, and PDI is evolving to meet these needs. For example:

- Cloud-first & hybrid: flexible deployments across multi-cloud, containerized environments, and on-premises.

- Real-time processing: integration with streaming platforms like Kafka for event-driven and continuous data flow.

- AI/ML enablement: preparing and cleansing data for advanced analytics by integrating with Python, R, and Spark.

- Automation & intelligence: shifting towards self-optimizing pipelines with predictive monitoring and error recovery.

- Governance & compliance: enhanced lineage tracking, role-based security, and audit trails for regulatory requirements.

How SPEC INDIA Helps Enterprises with Pentaho Data Integration

Though Pentaho Data Integration (PDI) lays a robust foundation for businesses, it still requires experts to implement it, customize it, and provide ongoing support. That is where a software development company with industry experience helps unlock the potential of PDI.

Here is how we will help you maximize the potential of PDI:

End-to-End Implementation Services

- Gathering Requirements & Roadmapping: Our ETL developers will help align your business workflows with objectives, compliance, and digital transformation initiatives.

- Custom Deployment: They will also configure PDI in on-premises, cloud, or hybrid environments that are customized to enterprise infrastructure.

- Proofs of Concept & Pilot Projects: If you want a prototype to see what the finished project will look like, we can build one rapidly.

Custom ETL Workflow Development

- We build scalable, reusable, and modular data pipelines for structured, semi-structured, and unstructured data.

- We also optimize workflows for performance, fault tolerance, and governance.

- Our ETL developers leverage metadata injection and advanced transformation to manage complex enterprise logic.

Integration with Modern Data Platforms

We bridge the gap between PDI and cloud data warehouses. Additionally, we ensure that legacy ERP/CRM systems are integrated with modern BI and analytics platforms. We also assist our clients in preparing AI/ML-ready datasets for data science and predictive analytics projects.

Enterprise Support & Maintenance

- 24/7 monitoring and troubleshooting to ensure pipeline reliability.

- Proactive performance tuning and scalability planning.

- Continuous upgrades, patches, and alignment with enterprise IT standards.

Training & Knowledge Transfer

- Our team will train your IT and BI teams so that they can adopt and scale PDI effectively.

- We also provide custom workshops that empower in-house teams with best practices, governance, and automation skills.


Conclusion

Pentaho Data Integration is more than an ETL tool. It is a strategic enabler that helps businesses achieve unified data, a modern data infrastructure, and accelerated analytics. With its scalability, cloud readiness, and support for AI/ML, PDI helps deliver measurable ROI and positions your business for future growth and expansion.

At SPEC INDIA, our motto is to enable organizations to reach their maximum potential through tailored implementation, optimization, and ongoing support. We transform your fragmented data into an organized, governed, reliable, and insight-driven ecosystem.

Let’s connect and discuss the requirements further before initiating the project.

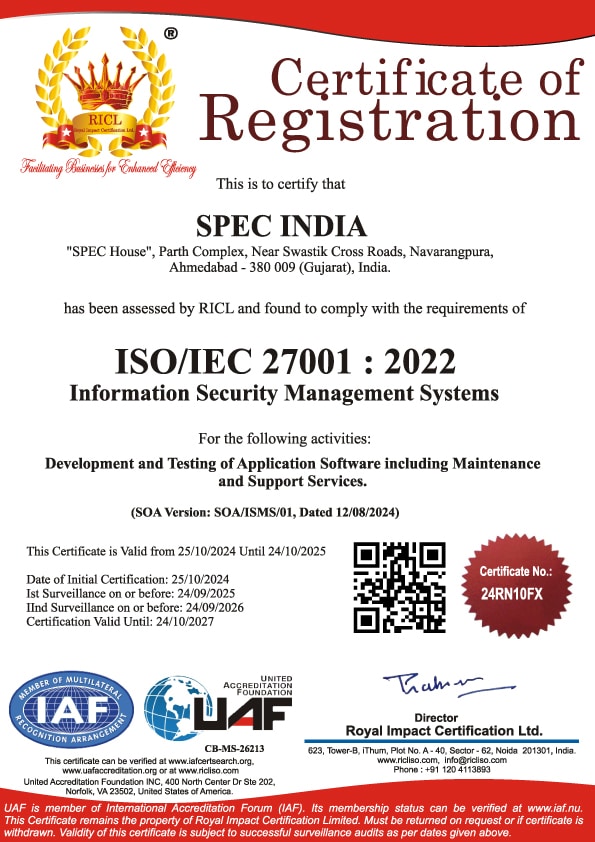

SPEC INDIA is your trusted partner for AI-driven software solutions, with proven expertise in digital transformation and innovative technology services. We deliver secure, reliable, and high-quality IT solutions to clients worldwide. As an ISO/IEC 27001:2022 certified company, we follow the highest standards for data security and quality. Our team applies proven project management methods, flexible engagement models, and modern infrastructure to deliver outstanding results. With skilled professionals and years of experience, we turn ideas into impactful solutions that drive business growth.