September 22, 2025

October 10th, 2025

Artificial intelligence (AI) is rapidly making its way into the modern business environment. It is deployed in applications such as answering customer questions and helping teams make better decisions. Many companies already use AI tools, in particular more powerful ones built on LLMs (large language models), such as ChatGPT or Google Gemini. Over the next three years, 92% of companies plan to increase their AI investments.

Most of these tools are cloud-based: organizations send their data over the internet to use them. This approach works, but it raises concerns about data security, cost, and limited control over how the models are used.

This is why interest in local LLMs is surging. Local LLMs are on-premises AI models that run on an organization's own computers or privately owned servers instead of a third-party cloud service reached over the internet. This gives organizations greater privacy, faster responses, and more control.

In this blog, we explore why local LLMs are gaining popularity and why they might be the future of enterprise AI.

Local LLMs (local large language models) are AI models that run on your own computers or on a local server rather than on a cloud-based service accessed over the internet.

You have probably heard of AI tools such as ChatGPT, Midjourney, and Gemini. These are cloud-hosted services: your data is uploaded over the network to the provider's servers, where the model processes it and returns a response.

Local LLMs, by contrast, keep everything on your side. The AI runs inside your company's own network, so you do not need an internet connection to use it. Your data stays confidential, responses can be faster because there is no round trip to a remote server, and you have more control over the AI.

Most local LLMs are openly available models that companies can modify to suit their needs. Popular ones include LLaMA, Mistral, and Falcon.

Cloud LLMs are like borrowing a powerful tool from someone else. Local LLMs are like owning that tool and using it whenever and however you want.

So, where do businesses stand with AI adoption today? You have probably seen tools such as ChatGPT or Google's AI products used to write emails, summarize documents, or even provide customer support.

Here is the thing, though: most of these tools are cloud-based, which means your data is sent to someone else's servers. For many companies, that alone is a red flag. So, why are companies shifting toward local LLMs?

Let’s break it down together.

1. You Want to Keep Your Data Safe

Imagine having sensitive client data, financial records, or internal documents. Would you really want to send all that out to a third-party server? Probably not.

Local LLMs keep things in-house. Your data never leaves your systems, which makes it much easier to comply with privacy regulations such as GDPR or HIPAA.

2. Tired of Paying Every Time You Use AI?

Cloud AI may seem like the simple choice, but per-use fees can add up to a lot of money over time. Every question and every answer has a price.

With local LLMs, you run the models yourself. Yes, there is an upfront cost for hardware and setup, but once it is paid, you do not pay each time you press Enter; what remains are running costs such as power and maintenance.
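To make the trade-off concrete, here is a rough break-even sketch. It assumes the cloud service bills per token and that running a local model has a fixed upfront cost plus a monthly running cost; every number in it is a made-up illustration, not a real price quote.

```python
import math


def cloud_monthly_cost(requests_per_month: int,
                       tokens_per_request: int,
                       price_per_million_tokens: float) -> float:
    """Estimate a monthly cloud bill under an assumed per-token pricing model."""
    total_tokens = requests_per_month * tokens_per_request
    return total_tokens / 1_000_000 * price_per_million_tokens


def break_even_months(upfront_cost: float,
                      local_monthly_cost: float,
                      cloud_cost_per_month: float) -> float:
    """Months until the upfront local investment is recovered by monthly savings."""
    monthly_savings = cloud_cost_per_month - local_monthly_cost
    if monthly_savings <= 0:
        # If running locally costs as much per month as the cloud, it never pays off.
        return math.inf
    return upfront_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical workload and prices, for illustration only.
    cloud = cloud_monthly_cost(requests_per_month=200_000,
                               tokens_per_request=1_500,
                               price_per_million_tokens=10.0)
    months = break_even_months(upfront_cost=12_000,
                               local_monthly_cost=500,
                               cloud_cost_per_month=cloud)
    print(f"Cloud: ${cloud:,.0f}/month, break-even after {months:.1f} months")
```

With these invented figures, the cloud bill comes to $3,000 per month and the local setup pays for itself after about 4.8 months. With a light workload, the savings shrink and the break-even point moves further out, which is why it is worth running the numbers for your own usage.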

3. You Need Speed, Not Lag

Have you ever waited for an AI tool to respond because of a slow internet connection? Frustrating, right? Local large language models run directly on your own machines, so there are no delays caused by internet issues. On suitable hardware, they are fast, smooth, and reliable, which is perfect when time matters.

4. Your Business Isn’t Like Everyone Else’s

Cloud-based models are general-purpose: they are smart but not necessarily tailored to your world. Local LLMs can be fine-tuned on your own data to fit your specific requirements. Whether you are in healthcare, manufacturing, or finance, you get an AI that understands your business.

5. You Don’t Always Have Internet (And That’s Okay)

Not every location has great connectivity. And even if it does, sometimes you just need your tools to work offline.

With local LLMs, no internet? No problem. They keep working no matter where you are.

Businesses have found many ways to use local LLMs. They help companies work faster, keep data secure, and cut costs, which is why enterprise LLMs play a growing role in day-to-day work. Below are some common use cases:

1. Customer Support

Local LLMs can automatically answer customer inquiries. Because all processing happens on company-owned systems, customer information stays secure and confidential. This is especially valuable for organizations that must safeguard confidential client data.

2. Document Summarization and Search

Companies typically hold large numbers of documents: reports, contracts, emails, and more. Local large language models can summarize these documents quickly and locate key information or answer questions based on their contents. This saves time and helps employees work more effectively while keeping data within enterprise privacy standards.
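Long documents often exceed how much text a model can read at once, so a common pattern is to split them into overlapping chunks, summarize each chunk, and then summarize the partial summaries. The sketch below assumes chunk sizes measured in words (models actually count tokens, so treat this as an approximation), and `summarize` is a hypothetical placeholder for whatever call you make to your local model.

```python
from typing import Callable, List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of `chunk_size` words; neighboring chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def summarize_long_document(text: str,
                            summarize: Callable[[str], str],
                            chunk_size: int = 200,
                            overlap: int = 20) -> str:
    """Map-reduce summary: summarize each chunk, then summarize the partial summaries."""
    partial_summaries = [summarize(chunk) for chunk in chunk_words(text, chunk_size, overlap)]
    return summarize("\n".join(partial_summaries))
```

The overlap means text near a chunk boundary appears in both neighboring chunks, so less context is lost at the cut. For testing without a model, any function that maps a string to a shorter string (for example, one that keeps the first sentence) can stand in for `summarize`.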

3. AI Assistants for Employees

Local LLMs can help companies build intelligent assistants for their teams. Such assistants can answer questions about company policies, support training, and give direct access to official information. With a secure AI deployment, productivity improves and employees need to ask colleagues for help less often.

4. Real-Time Help in Manufacturing or Retail

In industries such as manufacturing or retail, local LLMs can work alongside on-site machines and systems. They can provide immediate feedback, catch errors sooner, and help employees make better decisions, all without being online.

5. Helping Software Developers

Local LLMs can support tech teams by suggesting code, fixing errors, and writing simple explanations. The model can be fine-tuned on the company's own tools and coding style, so its suggestions are more useful. Because the deployment is local and secure, source code and other proprietary information stay within trusted systems.

These applications show that local LLMs can serve many parts of a business. Private AI is fast, secure, and under your control, which matches the demands of today's enterprises.

As enterprise LLMs have matured, many tools and platforms have emerged to support them. These tools make it easier to run, manage, and customize LLMs on your own machines. Here are some popular tools and ecosystems that support local LLMs:

1. Hugging Face

Hugging Face is a well-known platform that hosts a huge range of open-source LLMs. You can download models, fine-tune them on your data, and then run them on your local system.

It also provides libraries such as Transformers and Diffusers for working with different types of on-premises AI models.

2. Ollama

Ollama is an easy-to-use tool for running large language models on your local machine with just a few commands. It supports models such as LLaMA 2 and Mistral, and it is easy to set up and configure, even without deep technical expertise.
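Besides its command line, Ollama serves a local REST API, by default at `http://localhost:11434`. The sketch below uses only the Python standard library to call its `/api/generate` endpoint with streaming turned off. It assumes the Ollama server is running and that the model you name (here `mistral`, as an example) has already been downloaded with `ollama pull mistral`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the whole completion arrives in the "response" field.
    return body["response"]
```

Calling `generate("mistral", "Summarize our refund policy in one sentence.")` sends the prompt to your own machine only; nothing leaves the local network. Building the request separately from sending it keeps the payload easy to inspect and test without a running server.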

3. LM Studio

LM Studio is a desktop application for downloading, running, and chatting with LLMs locally. It has a clean interface and integrates with Hugging Face and other model sources, which makes it a great way to experiment with new models without writing any code.

4. LangChain

LangChain is a framework that helps developers build applications with LLMs. It can be used with local models as well as cloud ones. It provides functionality such as chaining tasks together, connecting to databases, and using other tools such as search or memory.
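The idea of "chaining" can be illustrated without LangChain itself. The sketch below does not use LangChain's API. The `chain` helper and the step functions are hypothetical, plain-Python stand-ins showing the pattern: a prompt template feeds a model, and the model's output feeds a parser. `fake_local_model` returns a fixed answer in place of a real local model call.

```python
from typing import Any, Callable, Dict, List


def chain(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps left to right: each step's output becomes the next step's input."""
    def run(value: Any) -> Any:
        for step in steps:
            value = step(value)
        return value
    return run


def prompt_template(variables: Dict[str, str]) -> str:
    """Fill a fixed prompt with the caller's input."""
    return ("Extract the action items from this meeting note, one per line:\n"
            f"{variables['note']}")


def fake_local_model(prompt: str) -> str:
    """Hypothetical stand-in for a local LLM call; it ignores the prompt."""
    return "- Send the Q3 report\n- Book the review meeting"


def parse_bullets(text: str) -> List[str]:
    """Turn '- item' lines into a clean list of strings."""
    items = []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("- "):
            line = line[2:]
        if line:
            items.append(line)
    return items


extract_actions = chain(prompt_template, fake_local_model, parse_bullets)
```

Calling `extract_actions({"note": "..."})` returns a Python list of action items. Because each step is an ordinary function, you can swap the fake model for a real local one without touching the prompt or the parser, which is the main benefit frameworks like LangChain formalize.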

5. LlamaIndex (formerly GPT Index)

LlamaIndex bridges the gap between your local large language models and your own data. It simplifies searching and retrieving information from documents, databases, or files, which helps when building chatbots or search engines that work with your own data.
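The core idea behind this kind of tool is retrieval: find the pieces of your data most relevant to a question and hand them to the model as context. The sketch below is not LlamaIndex's API; it is a minimal, self-contained illustration that ranks documents with a simple TF-IDF score (production systems usually use vector embeddings instead) and builds a prompt from the best matches.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_documents(query: str, docs: List[str], top_k: int = 3) -> List[Tuple[float, str]]:
    """Score each document with a TF-IDF sum over the query terms; drop zero scores."""
    doc_counts = [Counter(tokenize(doc)) for doc in docs]
    n_docs = len(docs)
    query_terms = set(tokenize(query))
    scored = []
    for doc, counts in zip(docs, doc_counts):
        length = sum(counts.values()) or 1
        score = 0.0
        for term in query_terms:
            if term not in counts:
                continue
            doc_freq = sum(1 for c in doc_counts if term in c)
            idf = math.log((n_docs + 1) / doc_freq)  # rarer terms weigh more
            score += counts[term] / length * idf
        scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pair for pair in scored[:top_k] if pair[0] > 0]


def build_prompt(query: str, docs: List[str], top_k: int = 3) -> str:
    """Place the best-matching documents into the prompt as context for the model."""
    context = "\n\n".join(doc for _, doc in rank_documents(query, docs, top_k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The resulting prompt can then be sent to a local model. Since both the documents and the model stay on your own systems, the whole question-answering loop runs without exposing your data.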

6. Efficient Models for Local Use

Several models are designed to run efficiently on local devices with limited memory and computing power.

These models are useful for companies that don’t have large servers but still want to use AI.
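A quick calculation shows why model size and numeric precision decide whether a model fits on ordinary hardware. Storing each weight with fewer bits (quantization) is one common way local-friendly model variants shrink. The sketch estimates memory for the weights alone, using a hypothetical 7-billion-parameter model as the example.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for the model weights alone, in GB (10**9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


if __name__ == "__main__":
    # A hypothetical 7-billion-parameter model stored at different precisions.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

At 16 bits per weight such a model needs about 14 GB, while at 4 bits it needs about 3.5 GB, small enough for many workstations. Treat these as lower bounds: the context being processed and the runtime itself need additional memory.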

These tools and models make it significantly easier for companies to adopt local LLMs without huge budgets or deep technical expertise.

Local LLMs are quickly becoming a game-changer for companies that want the capabilities of AI without compromising on privacy, speed, or control. Running AI at the edge lets organizations keep their data secure, reduce latency and cloud costs, and build more customized solutions by deploying models on their own infrastructure.

As regulations become stricter and AI becomes more embedded in business workflows, local LLMs represent a safe, smart, and scalable solution for the future. Businesses that invest in local large language models today are not just following a trend; they are strengthening their enterprise AI development strategy and securing long-term success.

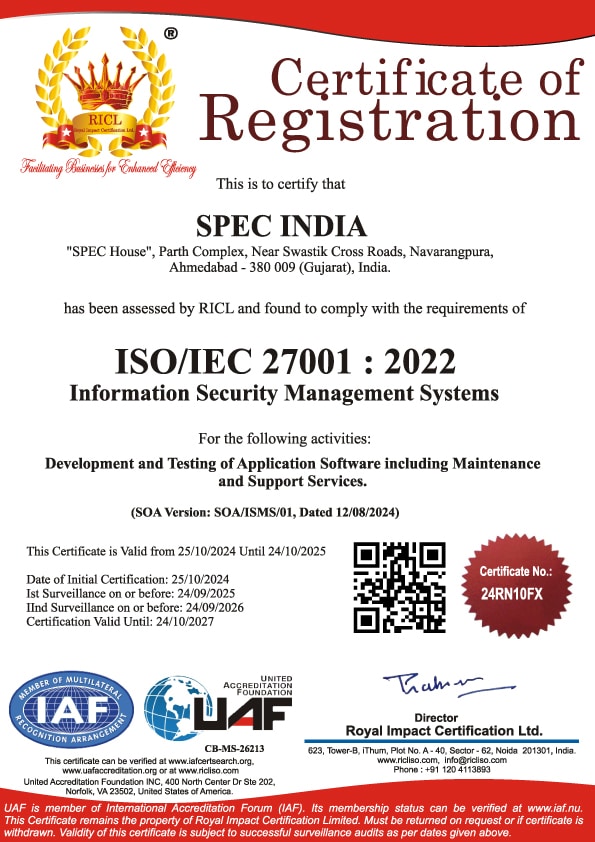

SPEC INDIA is your trusted partner for AI-driven software solutions, with proven expertise in digital transformation and innovative technology services. We deliver secure, reliable, and high-quality IT solutions to clients worldwide. As an ISO/IEC 27001:2022 certified company, we follow the highest standards for data security and quality. Our team applies proven project management methods, flexible engagement models, and modern infrastructure to deliver outstanding results. With skilled professionals and years of experience, we turn ideas into impactful solutions that drive business growth.

SPEC House, Parth Complex, Near Swastik Cross Roads, Navarangpura, Ahmedabad 380009, INDIA.
